The term “AI agent” is currently applied to almost anything that delivers reasonable answers in a chat window. This causes confusion and often creates false expectations in companies. The result is frequently a project that produces eloquent text but little measurable relief in day-to-day work.

To choose the right architecture for a company, you need to understand the fundamental difference:

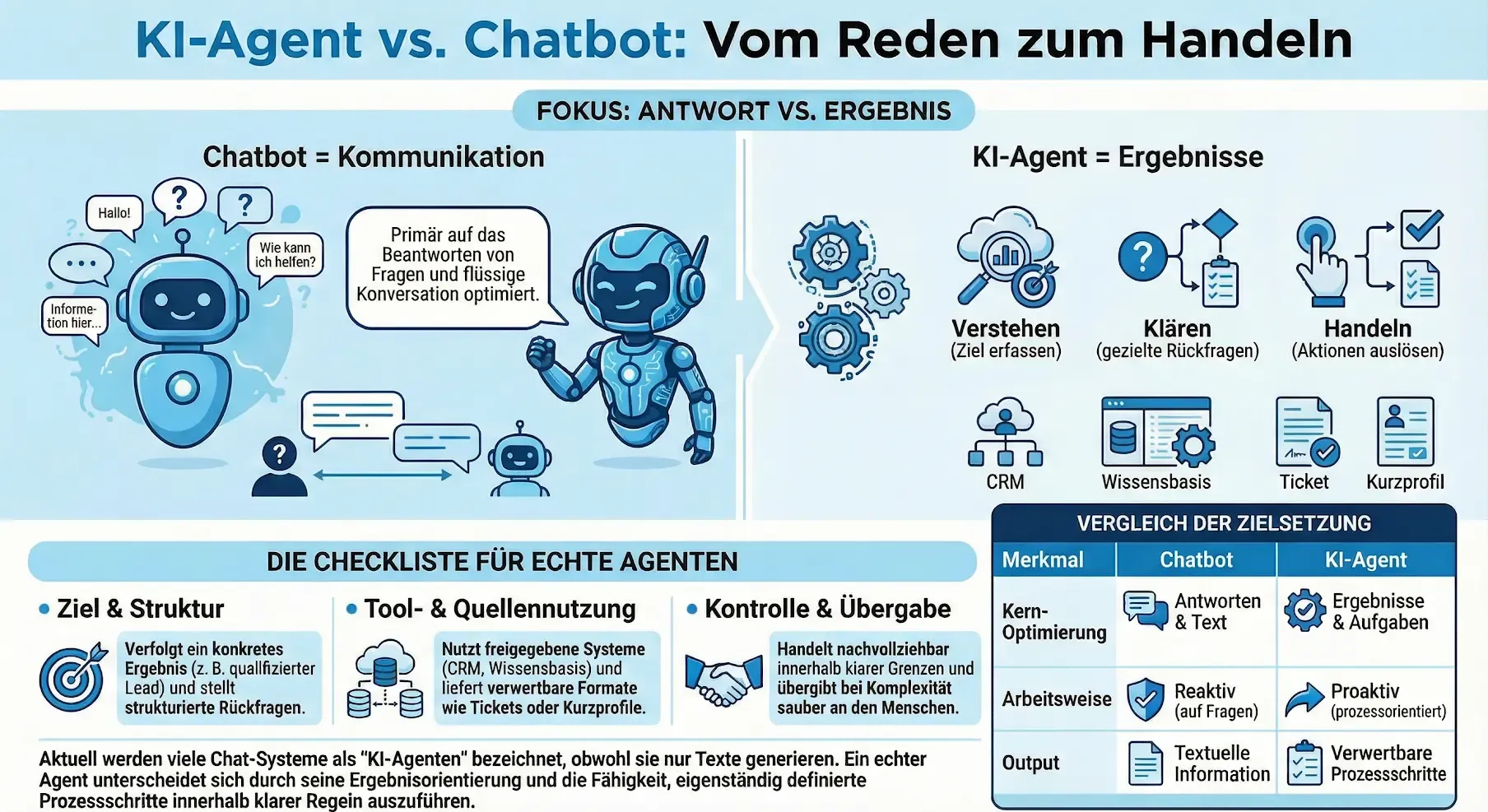

Short definition: A chatbot answers questions. An AI agent drives a process to an outcome.

An agent gathers context, asks targeted follow-up questions, and triggers defined steps within clear rules — including a clean handover when a human needs to take over.

Truth 1: Outcomes Instead of Answers

A classic chatbot is trained to conduct a conversation. An agent, on the other hand, is optimized to “get things done.” The goal is not the dialogue itself, but completing a task, such as:

- Resolving a complex inquiry

- Pre-qualifying a lead

- Preparing a support ticket

- Defining the next logical process step

Truth 2: Acting Within Rules

To work reliably, a real agent doesn’t operate in a vacuum; it executes defined steps. Rather than hallucinating solutions, it works through checklists:

- Missing information? → Request parameters

- Data needed? → Compile information from approved sources

- Does the user need to know something? → Provide documentation

- Is the case too complex? → Trigger handover to the right person

- What happens next? → Initiate follow-ups
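The checklist above can be sketched as an ordered rule table. This is only an illustration under stated assumptions: the `Case` fields, the `Action` names, and the order in which the rules are checked are hypothetical and not taken from any specific product.

```python
from dataclasses import dataclass, field
from enum import Enum


class Action(Enum):
    REQUEST_PARAMETERS = "request_parameters"
    COMPILE_INFORMATION = "compile_information"
    PROVIDE_DOCUMENTATION = "provide_documentation"
    HAND_OVER = "hand_over"
    INITIATE_FOLLOW_UP = "initiate_follow_up"


@dataclass
class Case:
    # Hypothetical state flags; a real system would derive them from the
    # conversation and from its connected tools.
    missing_parameters: list[str] = field(default_factory=list)
    too_complex: bool = False
    data_compiled: bool = False
    documentation_sent: bool = False


def next_action(case: Case) -> Action:
    """Return the first rule that applies (the rule order is an assumption)."""
    if case.too_complex:
        return Action.HAND_OVER
    if case.missing_parameters:
        return Action.REQUEST_PARAMETERS
    if not case.data_compiled:
        return Action.COMPILE_INFORMATION
    if not case.documentation_sent:
        return Action.PROVIDE_DOCUMENTATION
    return Action.INITIATE_FOLLOW_UP
```

For example, `next_action(Case(missing_parameters=["material"]))` returns `Action.REQUEST_PARAMETERS`. Because every possible outcome is an explicit rule, the behaviour stays reviewable and testable.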

The 2-Minute Check

How can you tell whether you’re dealing with a real agent system or a chatbot with a new coat of paint? We use a checklist with 7 characteristics.

If several of the following points are missing, it’s usually just a chatbot. Real agents offer:

- Goal orientation (focus on completion rather than conversation)

- Structured follow-up questions (targeted collection of missing information)

- Tool usage (access to approved sources/APIs)

- Actionable output format (e.g., JSON, brief profile, ticket)

- Clean handover (context transfer to human/system)

- Traceability (source references, rule references)

- Controllability (clear boundaries and escalation paths)
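The seven characteristics can also be written down as a simple checklist function. This is a rough sketch: the identifier names are hypothetical, and the `max_missing` threshold is an assumption, since the rule of thumb above only says "several".

```python
CHARACTERISTICS = (
    "goal_orientation",
    "structured_follow_up_questions",
    "tool_usage",
    "actionable_output_format",
    "clean_handover",
    "traceability",
    "controllability",
)


def missing_characteristics(observed: set[str]) -> list[str]:
    """List the checklist items that were not observed in the system."""
    return [c for c in CHARACTERISTICS if c not in observed]


def looks_like_agent(observed: set[str], max_missing: int = 1) -> bool:
    # max_missing is an assumed threshold, not a value from the checklist.
    return len(missing_characteristics(observed)) <= max_missing
```

A system that only shows goal orientation and tool usage would have five items missing, so `looks_like_agent({"goal_orientation", "tool_usage"})` returns `False`.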

A Real-World Scenario

The difference becomes clearest when both systems are given the same request. Scenario: A prospect writes: “We produce 5,000 parts per shift in a dusty environment — which solution fits?”

A chatbot draws on general training knowledge and broadly names various options or lists products that could theoretically fit. The user is left alone with a list.

The agent recognizes the intent (purchase consultation) and checks the parameters. It determines that the environment (“dusty”) and the quantity (5,000 parts per shift) are present, but the material type is missing.

It specifically asks for the missing material, narrows down options based on the database, and provides matching technical data sheets as downloads.

Finally, it creates a brief profile of the prospect and hands it over (including the technical constraints) to the responsible sales representative.
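The agent's side of this scenario might look roughly like the following sketch. It covers only the parameter check and the handover profile; narrowing options against a product database and attaching data sheets are omitted. The parameter names, the profile fields, and the example material are hypothetical placeholders.

```python
import json

# Hypothetical parameters the agent needs before it can recommend anything.
REQUIRED_PARAMETERS = ("quantity_per_shift", "environment", "material")


def missing_parameters(known: dict) -> list[str]:
    """Return the required parameters the prospect has not yet provided."""
    return [p for p in REQUIRED_PARAMETERS if p not in known]


def build_handover_profile(known: dict, recipient: str) -> str:
    """Create the brief profile as JSON once all parameters are known."""
    missing = missing_parameters(known)
    if missing:
        raise ValueError(f"cannot hand over, still missing: {missing}")
    profile = {
        "intent": "purchase_consultation",
        "constraints": known,
        "hand_over_to": recipient,
    }
    return json.dumps(profile, indent=2)


# Parameters extracted from the prospect's first message.
known = {"quantity_per_shift": 5000, "environment": "dusty"}
print(missing_parameters(known))  # ['material']

# Placeholder answer to the follow-up question (invented for illustration).
known["material"] = "aluminium"
print(build_handover_profile(known, "sales"))
```

The handover is deliberately blocked while parameters are missing, so the sales representative never receives an incomplete profile.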

Avoiding Common Project Mistakes

Why do many initiatives fail even though the technology is ready? It usually comes down to three avoidable mistakes:

- Starting too broadly: Too many exceptions and too little proof of concept.

- Working without rules and sources: The system delivers pretty answers but no reliable relief.

- Working without measurement: There’s no proof of efficiency and therefore no basis for scaling.

Our Perspective at P-CATION

At LIVOI, we view agents not as a short-term trend but as a fundamental architectural question: How do we create relief in daily work without losing control?

Three principles that go beyond the hype matter to us:

- Proof over claims: Does it work in real workflows?

- Control over black box: Clear rules, defined handovers, full traceability.

- Scalability over makeshift solutions: Processes must be repeatable, measurable, and extensible.