The Model Context Protocol (MCP) was developed to connect AI models with external tools. The basic idea is simple: a model should not only understand text but also be able to use tools that deliver data, perform calculations, or interact with external systems.

But as MCP grows, a fundamental problem is emerging. It is not technical in nature but structural. Many people only see the surface: the tools work and the AI responds. The real effort, however, is hidden in the background.

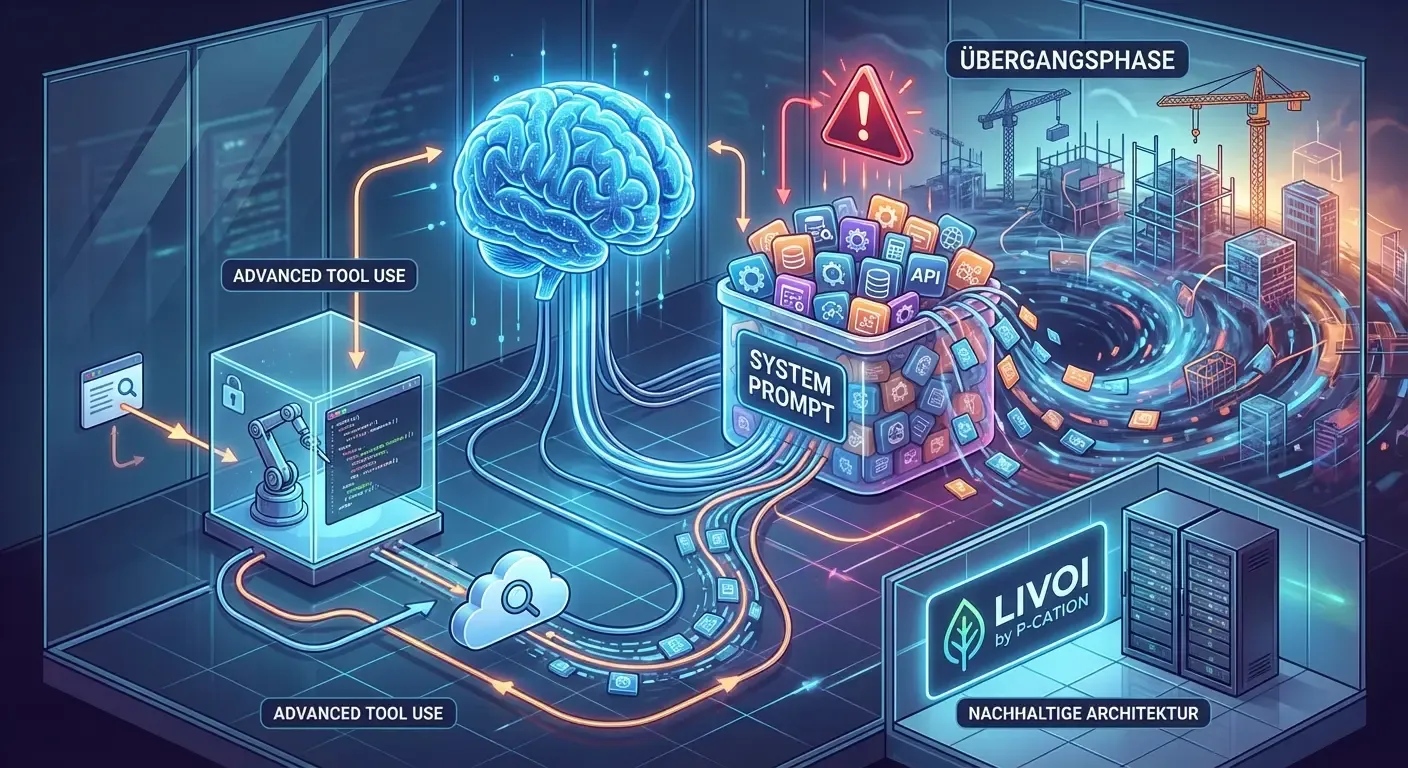

MCP appears elegant, yet it creates enormous overhead in the prompt because the tool definitions must be sent along with every request.

Why the System Prompt Overflows

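An AI model only works reliably when it receives precise information. In a typical MCP integration, the host application fetches the tool descriptions from every connected MCP server and writes them, together with rules and examples, directly into the model's context, usually the system prompt. This is exactly where the core problem arises: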

The more tools a project uses, the more prompt space they take up. The available context shrinks, and with it, often the quality of the responses.

Think of it like a bag you have to fill before every conversation. At first, it’s manageable. But soon it contains:

- the system instructions

- every single tool definition

- formatting rules

- usage examples

- technical constraints

…until there’s barely any room left for the actual conversation.
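To make the effect concrete, here is a minimal Python sketch that estimates how much of a context window is left once every tool definition is included. The definitions follow the MCP tool shape (`name`, `description`, `inputSchema`), but the tools themselves, the 32,000-token window, and the rule of thumb of roughly four characters per token are illustrative assumptions. Real tokenizers and models differ.

```python
import json

# Assumption: a crude rule of thumb of ~4 characters per token.
# Real tokenizers vary by model, so treat the numbers as rough estimates.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Very rough token estimate based on character count."""
    return max(1, len(text) // CHARS_PER_TOKEN)


def make_tool(i: int) -> dict:
    """Build a hypothetical tool definition in the MCP shape (name, description, inputSchema)."""
    return {
        "name": f"lookup_records_{i}",
        "description": (
            f"Hypothetical tool {i}: looks up records in an external system and "
            "returns them as JSON. Use only when the user asks for record data."
        ),
        "inputSchema": {
            "type": "object",
            "properties": {
                "query": {"type": "string", "description": "Search term"},
                "limit": {"type": "integer", "description": "Maximum number of results"},
            },
            "required": ["query"],
        },
    }


def remaining_context(system_prompt: str, tools: list[dict], context_window: int) -> int:
    """Tokens left for the conversation after the instructions and all tool definitions."""
    tool_tokens = sum(estimate_tokens(json.dumps(tool)) for tool in tools)
    return context_window - estimate_tokens(system_prompt) - tool_tokens


# Hypothetical system instructions, repeated to mimic formatting rules and examples.
system_prompt = "Follow the formatting rules and usage examples below. " * 20

for n in (0, 10, 50, 100):
    tools = [make_tool(i) for i in range(n)]
    left = remaining_context(system_prompt, tools, context_window=32_000)
    print(f"{n:>3} tools -> about {left} tokens left for the conversation")
```

In this sketch the overhead grows linearly with the number of tools, and it is paid before the user has typed a single word.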

Another Often Underestimated Factor: Cost

Because most model APIs are stateless, the full set of tool definitions is sent again with every single request. This increases not only the technical load but also the price per request: more prompt tokens simply mean higher operating costs, regardless of whether a tool is actually needed in the conversation.

Especially with complex or extensive toolsets, this permanent baseline load can become a significant cost factor that heavily impacts scalability.
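The baseline cost can be estimated with simple arithmetic. The sketch below assumes a fixed number of tool-definition tokens that are resent with every request. All numbers, including the price per million input tokens, are hypothetical placeholders rather than any provider's real rates, and output tokens as well as discounts such as prompt caching are ignored.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    requests_per_day: int
    tool_definition_tokens: int      # resent with every request, whether a tool is used or not
    conversation_tokens: int         # average tokens of the actual exchange
    price_per_million_input: float   # hypothetical placeholder price, not a real rate


def daily_input_cost(w: Workload) -> float:
    """Input-token cost per day (output tokens and caching discounts are ignored)."""
    tokens_per_request = w.tool_definition_tokens + w.conversation_tokens
    return w.requests_per_day * tokens_per_request * w.price_per_million_input / 1_000_000


def tool_overhead_share(w: Workload) -> float:
    """Fraction of each request's input tokens spent on tool definitions."""
    return w.tool_definition_tokens / (w.tool_definition_tokens + w.conversation_tokens)


with_tools = Workload(requests_per_day=10_000, tool_definition_tokens=15_000,
                      conversation_tokens=2_000, price_per_million_input=3.0)
without_tools = Workload(10_000, 0, 2_000, 3.0)

print(f"with tools:    {daily_input_cost(with_tools):.2f} per day")
print(f"without tools: {daily_input_cost(without_tools):.2f} per day")
print(f"tool overhead: {tool_overhead_share(with_tools):.0%} of input tokens")
```

With these made-up numbers, the tool definitions make up about 88% of the input tokens, and the daily input cost rises from 60 to 510 currency units, even on days when no tool is ever called.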

How the Industry Is Trying to Cope

New approaches are emerging that attempt to work around the growing complexity. One example is Anthropic's concept of Advanced Tool Use. It moves part of the MCP problem to a different place:

- The model receives only a small piece of information: “Tools exist.”

- The model writes code that runs in an isolated environment.

- This code calls a kind of tool discovery service.

- Only now do the full tool descriptions come into play.

- The AI receives the relevant data, but only after several intermediate steps.
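The discovery flow described in the list can be illustrated with a deliberately simplified Python sketch. It is not Anthropic's implementation or API: the registry, the `search_tools` and `load_definitions` helpers, the hint text, and the keyword matching are all hypothetical stand-ins. The point is only that the prompt starts with a one-line hint and that full definitions are loaded for the matching tools alone.

```python
import json
import re

# Hypothetical registry standing in for a tool discovery service.
# Definitions use the MCP shape (name, description, inputSchema).
TOOL_REGISTRY: dict[str, dict] = {
    "get_weather": {
        "name": "get_weather",
        "description": "Return the current weather for a city.",
        "inputSchema": {"type": "object",
                        "properties": {"city": {"type": "string"}},
                        "required": ["city"]},
    },
    "create_invoice": {
        "name": "create_invoice",
        "description": "Create an invoice for a customer.",
        "inputSchema": {"type": "object",
                        "properties": {"customer_id": {"type": "string"},
                                       "amount": {"type": "number"}},
                        "required": ["customer_id", "amount"]},
    },
    "search_crm": {
        "name": "search_crm",
        "description": "Search customer records in the CRM.",
        "inputSchema": {"type": "object",
                        "properties": {"query": {"type": "string"}},
                        "required": ["query"]},
    },
}

# Step 1: the only tool information placed in the prompt up front (hypothetical wording).
MINIMAL_HINT = "Tools exist. Call search_tools(query) to find the ones you need."


def _words(text: str) -> set[str]:
    """Lowercase words of at least 4 letters (a crude filter for filler words)."""
    return {w for w in re.findall(r"[a-z]+", text.lower()) if len(w) >= 4}


def search_tools(query: str) -> list[str]:
    """Step 3: discovery returns only the names of tools whose name or description matches."""
    wanted = _words(query)
    return [name for name, tool in TOOL_REGISTRY.items()
            if wanted & _words(name.replace("_", " ") + " " + tool["description"])]


def load_definitions(names: list[str]) -> list[dict]:
    """Step 4: full definitions are loaded only for the selected tools."""
    return [TOOL_REGISTRY[name] for name in names]


# Step 2 would be model-written code running in a sandbox; here we call the helpers directly.
selected = load_definitions(search_tools("weather in Berlin"))
all_tools = list(TOOL_REGISTRY.values())
print(MINIMAL_HINT)
print(f"loaded {len(selected)} of {len(all_tools)} definitions")
print(f"{len(json.dumps(selected))} vs {len(json.dumps(all_tools))} characters of tool JSON")
```

A production setup would need a far better matching strategy than this keyword overlap, and step 2, executing model-written code in an isolated environment, is precisely the kind of additional architectural layer that such approaches introduce.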

This relieves the prompt but adds new layers to the architecture. Whether this will be simpler in the long run or just “differently complex” remains an open question.

A Transitional Phase

The entire AI tooling landscape is currently being restructured. MCP is neither wrong nor perfect; it is part of this transition. Much is only just emerging, and some things will look quite different in a few months.

The key takeaway is this: the AI world is still searching for stable standards. Experimentation is part of the process.

Our Perspective at P-CATION

For LIVOI, we rely on sustainable technical decisions. For us, sustainability also means choosing an architecture that won't need to be completely replaced by the next trend.

We are closely monitoring MCP and its alternatives without jumping to conclusions. One thing is clear: the best solutions rarely emerge at the height of upheaval, but rather once the dust has settled a bit.